Detecting an Object with AKAZE Local Features

Compute features with OpenCV's A-KAZE and use them to detect an object.

(Not sure I've worded that correctly.)


Drawing the green rectangular frame around the detected object was the hard part.

■Sample code

#include <vector>

#include <iostream>

#include <opencv2/opencv.hpp>

int main()

{

// Load the scene image and the template image

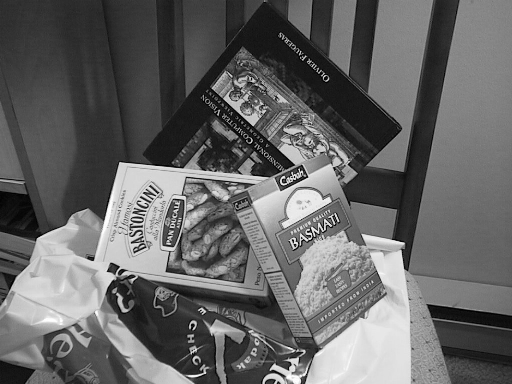

cv::Mat source_image = cv::imread("box_in_scene.png");

if (source_image.empty()) {

std::cerr << "Error: failed to load box_in_scene.png" << std::endl;

return -1;

}

cv::imshow("source", source_image);

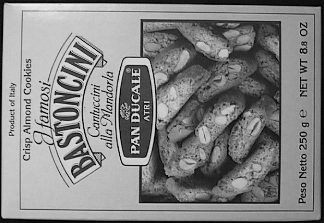

cv::Mat template_image = cv::imread("box.png");

if (template_image.empty()) {

std::cerr << "Error: failed to load box.png" << std::endl;

return -1;

}

cv::imshow("template", template_image);

// AKAZE

try {

std::vector<cv::KeyPoint> source_keypoints;

std::vector<cv::KeyPoint> template_keypoints;

cv::Mat source_descriptors;

cv::Mat template_descriptors;

// cv::Ptr<cv::AKAZE> akaze = cv::AKAZE::create(cv::AKAZE::DESCRIPTOR_MLDB, 0, 3, 0.001f);

cv::Ptr<cv::AKAZE> akaze = cv::AKAZE::create();

akaze->detectAndCompute(source_image, cv::noArray(), source_keypoints, source_descriptors);

akaze->detectAndCompute(template_image, cv::noArray(), template_keypoints, template_descriptors);

// Draw the keypoints and display them

cv::Mat source_keypoints_image;

cv::Mat template_keypoints_image;

cv::drawKeypoints(source_image, source_keypoints, source_keypoints_image, cv::Scalar::all(-1), cv::DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

cv::drawKeypoints(template_image, template_keypoints, template_keypoints_image, cv::Scalar::all(-1), cv::DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

cv::imshow("source keypoints", source_keypoints_image);

cv::imshow("template keypoints", template_keypoints_image);

#if 1

cv::BFMatcher matcher(cv::NORM_HAMMING);

std::vector<std::vector<cv::DMatch>> nn_matches;

matcher.knnMatch(template_descriptors, source_descriptors, nn_matches, 2); // Note: the template descriptors are the first (query) argument

#else

// FLANNBASED gave poor accuracy

cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create(cv::DescriptorMatcher::MatcherType::FLANNBASED);

std::vector<std::vector<cv::DMatch>> nn_matches;

if (template_descriptors.type() != CV_32F) {

template_descriptors.convertTo(template_descriptors, CV_32F);

}

if (source_descriptors.type() != CV_32F) {

source_descriptors.convertTo(source_descriptors, CV_32F);

}

matcher->knnMatch(template_descriptors, source_descriptors, nn_matches, 2);

#endif

std::cout << "nn_matches.size()=" << nn_matches.size() << std::endl;

// Ratio test: keep a match only when its nearest descriptor is clearly closer than the second nearest

const float ratio_thresh = 0.5f;

std::vector<cv::DMatch> good_matches;

for (size_t i = 0; i < nn_matches.size(); i++)

{

if (nn_matches[i].size() >= 2 && nn_matches[i][0].distance < ratio_thresh * nn_matches[i][1].distance) // skip entries with fewer than 2 neighbors

{

good_matches.push_back(nn_matches[i][0]);

}

}

cv::Mat matches_image;

cv::drawMatches(template_image, template_keypoints, source_image, source_keypoints, good_matches, matches_image, cv::Scalar::all(-1), cv::Scalar::all(-1), std::vector<char>(), cv::DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

std::vector<cv::Point2f> obj;

std::vector<cv::Point2f> scene;

std::cout << "good_matches.size()=" << good_matches.size() << std::endl;

for (size_t i = 0; i < good_matches.size(); i++)

{

//-- Get the keypoints from the good matches

obj.push_back(template_keypoints[good_matches[i].queryIdx].pt);

scene.push_back(source_keypoints[good_matches[i].trainIdx].pt);

}

// Draw a rectangle at the detected location

if (good_matches.size() >= 4) { // cv::findHomography needs at least 4 point pairs

cv::Mat H = cv::findHomography(obj, scene, cv::RANSAC);

if (!H.empty()) {

//-- Get the corners from the image_1 ( the object to be "detected" )

std::vector<cv::Point2f> obj_corners(4);

obj_corners[0] = cv::Point2f(0.0, 0.0);

obj_corners[1] = cv::Point2f((float)template_image.cols, 0.0);

obj_corners[2] = cv::Point2f((float)template_image.cols, (float)template_image.rows);

obj_corners[3] = cv::Point2f(0.0, (float)template_image.rows);

// Map the template corners into the scene to outline the object

std::vector<cv::Point2f> scene_corners(4);

cv::perspectiveTransform(obj_corners, scene_corners, H);

//-- Draw lines between the corners (the mapped object in the scene - image_2 )

cv::line(matches_image, scene_corners[0] + cv::Point2f((float)template_image.cols, 0.0), scene_corners[1] + cv::Point2f((float)template_image.cols, 0.0), cv::Scalar(0, 255, 0), 4);

cv::line(matches_image, scene_corners[1] + cv::Point2f((float)template_image.cols, 0.0), scene_corners[2] + cv::Point2f((float)template_image.cols, 0.0), cv::Scalar(0, 255, 0), 4);

cv::line(matches_image, scene_corners[2] + cv::Point2f((float)template_image.cols, 0.0), scene_corners[3] + cv::Point2f((float)template_image.cols, 0.0), cv::Scalar(0, 255, 0), 4);

cv::line(matches_image, scene_corners[3] + cv::Point2f((float)template_image.cols, 0.0), scene_corners[0] + cv::Point2f((float)template_image.cols, 0.0), cv::Scalar(0, 255, 0), 4);

// cv::minAreaRect returns a cv::RotatedRect, so that may be worth considering as well

}

}

//-- Show detected matches

cv::imshow("Good Matches & Object detection", matches_image);

}

catch (const std::exception& e) {

std::cout << e.what() << std::endl;

}

cv::waitKey();

return 0;

}
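The frame-drawing step in the sample code comes down to two operations: each template corner is mapped through the 3x3 homography (including the perspective divide), and the result is shifted right by the template width, because cv::drawMatches places the template on the left side of the combined image. The sketch below reproduces only that arithmetic without OpenCV, as an illustration of what cv::perspectiveTransform plus the offset are doing. `Point2`, `Homography`, `projectPoint`, and `frameCornersInMatchesImage` are hypothetical names introduced here, not OpenCV API, and the code assumes the homogeneous coordinate w is non-zero.

```cpp
#include <array>
#include <cstddef>

// Hypothetical helper type for illustration (not part of OpenCV).
struct Point2 {
    double x;
    double y;
};

// Row-major 3x3 homography, laid out like the matrix returned by cv::findHomography.
using Homography = std::array<std::array<double, 3>, 3>;

// Map a point through H, including the perspective divide by w.
// Assumption: w is non-zero for the points passed in.
Point2 projectPoint(const Homography& H, const Point2& p) {
    const double x = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    const double y = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    const double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    return {x / w, y / w};
}

// Corners of the detected object, expressed in the side-by-side matches image.
// The x offset of templateCols accounts for the template drawn on the left.
std::array<Point2, 4> frameCornersInMatchesImage(const Homography& H,
                                                 double templateCols,
                                                 double templateRows) {
    const std::array<Point2, 4> corners = {{
        {0.0, 0.0},
        {templateCols, 0.0},
        {templateCols, templateRows},
        {0.0, templateRows},
    }};
    std::array<Point2, 4> out{};
    for (std::size_t i = 0; i < corners.size(); ++i) {
        const Point2 q = projectPoint(H, corners[i]);
        out[i] = {q.x + templateCols, q.y};
    }
    return out;
}
```

Connecting consecutive corners (0-1, 1-2, 2-3, 3-0) with line segments gives the green frame. Without the x offset, the frame would be drawn over the template half of the image rather than over the scene.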

■Execution result

nn_matches.size()=383

good_matches.size()=11
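The drop from 383 candidate matches to 11 good matches comes from the ratio test in the sample code, which keeps a match only when its best distance is less than `ratio_thresh` (0.5) times the second-best distance. The following is a minimal standalone sketch of that filter. `Match` is a hypothetical stand-in for cv::DMatch reduced to the fields used here, and `ratioTest` is a name introduced for illustration.

```cpp
#include <vector>

// Hypothetical stand-in for cv::DMatch, reduced to the fields used here.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Keep the best match of each query only when it is clearly better
// than the second-best candidate for the same query.
std::vector<Match> ratioTest(const std::vector<std::vector<Match>>& knnMatches,
                             float ratioThresh) {
    std::vector<Match> good;
    for (const auto& candidates : knnMatches) {
        // A query with fewer than two neighbors cannot be ratio-tested.
        if (candidates.size() < 2) {
            continue;
        }
        if (candidates[0].distance < ratioThresh * candidates[1].distance) {
            good.push_back(candidates[0]);
        }
    }
    return good;
}
```

A lower threshold keeps fewer but more distinctive matches. Since cv::findHomography needs at least 4 point pairs, a threshold that is too strict can leave too few matches to locate the object.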

That's all.